XGBoost Multi-class Example

```python
import shap
import xgboost
from sklearn.model_selection import train_test_split

# Load the iris data (three classes) and hold out 20% of it for testing.
X_train, X_test, Y_train, Y_test = train_test_split(
    *shap.datasets.iris(), test_size=0.2, random_state=0
)

# Load the JavaScript that SHAP's interactive plots need in a notebook.
shap.initjs()
```

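```python
# Iris has three classes, so use XGBoost's multi-class probability objective
# rather than a binary one.
model = xgboost.XGBClassifier(objective="multi:softprob", max_depth=4, n_estimators=10)
model.fit(X_train, Y_train)
```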

```
XGBClassifier(base_score=0.5, booster='gbtree', colsample_bylevel=1,
              colsample_bytree=1, gamma=0, learning_rate=0.1, max_delta_step=0,
              max_depth=4, min_child_weight=1, missing=None, n_estimators=10,
              n_jobs=1, nthread=None, objective='multi:softprob', random_state=0,
              reg_alpha=0, reg_lambda=1, scale_pos_weight=1, seed=None,
              silent=True, subsample=1)
```
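The fitted model reports `objective='multi:softprob'`. Under that objective XGBoost grows trees for every class in each boosting round, sums each class's trees into one raw score (margin), and turns the per-class margins into probabilities with a softmax. A minimal NumPy sketch of that last step follows; the helper name `softprob` and the margin values are hypothetical, not taken from XGBoost or from the model above.

```python
import numpy as np


def softprob(margins):
    """Softmax over the last axis: per-class margins -> class probabilities.

    `margins` has shape (n_samples, n_classes). This is a hypothetical helper
    illustrating the normalisation, not an XGBoost function.
    """
    # Subtract each row's max for numerical stability; softmax is shift-invariant.
    shifted = margins - margins.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


# Made-up margins for two samples and the three iris classes.
margins = np.array([[1.2, -0.3, -0.9],
                    [-0.5, 0.8, 0.1]])
probs = softprob(margins)
print(probs.round(3))
print(probs.argmax(axis=1))  # predicted class index per sample
```

Each row of `probs` sums to 1, and the predicted class is the row's argmax. Subtracting the row maximum leaves the result unchanged but avoids overflow in `np.exp` for large margins.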

[3]:

shap_values = shap.TreeExplainer(model).shap_values(X_test)

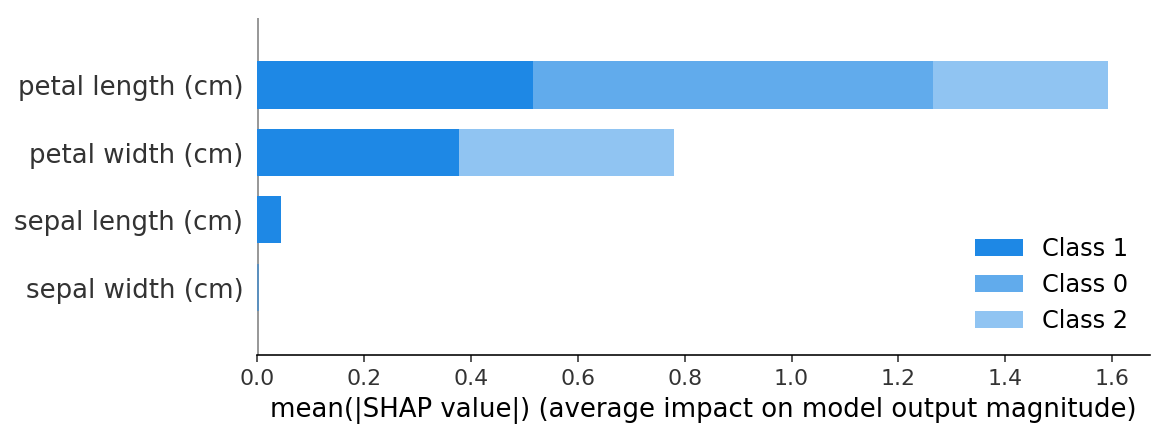

shap.summary_plot(shap_values, X_test)
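By default TreeExplainer attributes XGBoost's raw (pre-softmax) margin, so each class has its own attributions, and for every sample the class's base value plus the sum of its feature attributions reproduces that class's margin. The sketch below illustrates this with random stand-in attributions laid out as (samples, features, classes) and hypothetical base values; with the real explainer, `explainer.expected_value` supplies the base values, and the reconstructed margins should match `model.predict(X_test, output_margin=True)` up to floating-point error. Depending on the SHAP version, `shap_values` is either a list of per-class `(samples, features)` arrays or a single 3-D array; `np.stack(shap_values, axis=-1)` converts the list form to the layout used here. The sketch also computes the mean absolute SHAP value per feature and class, which, as we understand it, is what the multi-class summary bar chart stacks.

```python
import numpy as np

rng = np.random.default_rng(0)
n_samples, n_features, n_classes = 5, 4, 3

# Stand-in attributions with layout (samples, features, classes); random numbers
# replace real TreeExplainer output for illustration only.
shap_vals = rng.normal(scale=0.5, size=(n_samples, n_features, n_classes))
# Hypothetical per-class base values (what expected_value would provide).
base_values = np.array([0.1, -0.2, 0.05])

# Local accuracy: base value + sum of a sample's attributions = its margin.
margins = base_values + shap_vals.sum(axis=1)  # shape (n_samples, n_classes)

# Softmax maps the per-class margins to class probabilities.
exp = np.exp(margins - margins.max(axis=1, keepdims=True))
probs = exp / exp.sum(axis=1, keepdims=True)

# Global importance: mean |SHAP| over samples, per feature and per class.
mean_abs = np.abs(shap_vals).mean(axis=0)  # shape (n_features, n_classes)
# Rank features by importance summed over classes, most important first.
order = np.argsort(-mean_abs.sum(axis=1))

print(margins.shape, probs.shape, mean_abs.shape)
print("feature order:", order)
```

Working in margin space keeps the attributions additive; the softmax is applied only afterwards, so a feature's effect on a class probability also depends on the other classes' margins.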